Decision tree induction is the learning of decision trees from class-labeled training tuples.A decision tree is a flowchart-like tree structure, where each internal node (nonleaf node) denotes a test on an attribute, each branch represents an outcome of the test, and each leaf

node (or terminal node) holds a class label. The top most node in a tree is theroot node.

Fig. A decision tree for the concept buys computer

How are decision trees used for classification?

Given a tuple, X, for which the associated class label is unknown, the attribute values of the tuple are tested against the decision tree. A path is traced from the root to a leaf node, which holds the class prediction for that tuple. Decision trees can easily be converted to classification rules.

Why are decision tree classifiers so popular?

The construction of decision tree classifiers does not require any domain knowledge or parameter setting, and therefore is appropriate for exploratory knowledge discovery. Decision trees can handle high dimensional data. Their representation of acquired knowledge in tree form is intuitive and generally easy to assimilate by humans. The learning and classification steps of decision tree induction are simple and fast. In general, decision tree classifiers have good accuracy.

4.3.1. Decision Tree Induction:

Decision tree algorithm known as ID3 (Iterative Dichotomiser) and it is expanded on earlier work on concept learning systems.

In later presented C4.5 (a successor of ID3), which became a benchmark to which newer supervised learning algorithms are often compared.

Classification and Regression Trees (CART), which described the generation of binary decision trees. ID3 and CART were invented independently of one another at around the same time, yet follow a similar approach for learning decision trees from training tuples.

ID3, C4.5, and CART adopt a greedy (i.e., nonbacktracking) approach in which decision trees are constructed in a top-down recursive divide-and-conquer manner. Most algorithms for decision tree induction also follow such a top-down approach, which starts with a training set of tuples and their associated class labels. The training set is recursively partitioned into smaller subsets as the tree is being built. A basic decision tree algorithm is summarized here.

Fig. Basic algorithm for inducing a decision tree from training tuples.

The strategy is as follows.

The algorithm is called with three parameters: D, attribute list, andAttribute selection method.We refer to D as a data partition. Initially, it is the complete set of training tuples and their associated class labels. The parameter attribute list is a list of attributes describing the tuples. Attribute selection method specifies a heuristic procedure for selecting the attribute that “best” discriminates the given tuples according to class. This procedure employs an attribute selection measure, such as information gain or the gini index. Whether the tree is strictly binary is generally driven by the attribute selection measure. Some attribute selection measures, such as the gini index, enforce the resulting tree to be binary. Others, like information gain, do not, therein allowing multiway splits (i.e., two or more branches to be grown from a node).

The tree starts as a single node, N, representing the training tuples inD (step 1).

If the tuples in D are all of the same class, then node N becomes a leaf and is labeled with that class (steps 2 and 3). Note that steps 4 and 5 are terminating conditions. All of the terminating conditions are explained at the end of the algorithm.

Otherwise, the algorithm calls Attribute selection method to determine the splitting criterion. The splitting criterion tells us which attribute to test at node N by determining the “best” way to separate or partition the tuples in Dinto individual classes (step 6). The splitting criterion also tells us which branches to grow from node N with respect to the outcomes of the chosen test. More specifically, the splitting criterion indicates the splitting attribute and may also indicate either a split-point or a splitting subset. The splitting criterion is determined so that, ideally, the resulting partitions at each branch are as “pure” as possible.

A partition is pure if all of the tuples in it belong to the same class. In other words, if we were to split up the tuples in D according to the mutually exclusive outcomes of the splitting criterion, we hope for the resulting partitions to be as pure as possible.

The node N is labeled with the splitting criterion, which serves as a test at the node (step 7). A branch is grown from node N for each of the outcomes of the splitting criterion. The tuples in D are partitioned accordingly (steps 10 to 11). There are three possible scenarios, as illustrated in Figure 6.4. Let A be the splitting attribute. A has v distinct values, fa1, a2, : : : , avg, based on the training data.

1. A is discrete-valued: In this case, the outcomes of the test at node Ncorrespond directly to the known values of A. A branch is created for each known value, aj, of A and labeled with that value (Figure 6.4(a)). Partition Djis the subset of class-labeled tuples in D having value aj of A. Because all of the tuples in a given partition have the same value for A, then A need not be considered in any future partitioning of the tuples. Therefore, it is removed from attribute list (steps 8 to 9).

2. A is continuous-valued: In this case, the test at node N has two possible outcomes, corresponding to the conditions A _ split point and A >split point, respectively, where split point is the split-point returned byAttribute selection method as part of the splitting criterion. (In practice, the split-point, a, is often taken as the midpoint of two known adjacent values ofA and therefore may not actually be a pre-existing value of A from the training data.) Two branches are grown from N and labeled

according to the above outcomes (Figure 6.4(b)). The tuples are partitioned such thatD1 holds the subset of class-labeled tuples inDforwhich A_split point,while D2 holds the rest.

3. A is discrete-valued and a binary treemust be produced (as dictated by the attribute

selection measure or algorithm being used): The test at node N is of the form “A 2 SA?”. SA is the splitting subset for A, returned by Attribute selection method as part of the splitting criterion. It is a subset of the known values ofA. If a given tuple has value aj of A and if aj 2 SA, then the test at node N is satisfied. Two branches are grown from N . By convention, the left branch out of N is labeled yes so that D1 corresponds to the subset of class-labeled tuples in Dthat satisfy the test. The right branch out of N is labeled no so that D2 corresponds to the subset of class-labeled tuples from D that do not satisfy the test.

The algorithm uses the same process recursively to form a decision tree for the tuples

at each resulting partition, Dj, of D (step 14).

The recursive partitioning stops only when any one of the following terminating conditions is true:

1. All of the tuples in partition D (represented at node N) belong to the same class

(steps 2 and 3), or

2. There are no remaining attributes on which the tuples may be further partitioned

(step 4). In this case, majority voting is employed (step 5). This involves converting node N into a leaf and labeling it with the most common class inD. Alternatively, the class distribution of the node tuples may be stored.

3. There are no tuples for a given branch, that is, a partition Dj is empty (step 12).

The above figure shows three possibilities for partitioning tuples based on the splitting criterion, shown with examples. Let A be the splitting attribute. (a) If A is discrete-valued, then one branch is grown for each known value of A. (b) If A is continuous-valued, then two branches are grown, corresponding toA _ split point and A > split point. (c) If A is discrete-valued and a binary tree must be produced, then the test is of the form A 2 SA, where SA is the splitting subset for A.

In this case, a leaf is created with the majority class in D (step 13).

The resulting decision tree is returned (step 15).

Attribute Selection Measures :

Attribute selection measures are used to select the attribute that best partitions the tuples into distinct classes.

An attribute selection measure is a heuristic for selecting the splitting criterion that “best” separates a given data partition, D, of class-labeled training tuples into individual classes. If we were to split D into smaller partitions according to the outcomes of the splitting criterion, ideally each partition would be pure (i.e., all of the tuples that fall into a given partition would belong to the same class).

Attribute selection measures are also known as splitting rules because they determine how the tuples at a given node are to be split.

The attribute having the best score for the measure6 is chosen as thesplitting attribute for the given tuples. If the splitting attribute is continuous-valued or if we are restricted to binary trees then, respectively, either a split point or a splitting subset must also be determined as part of the splitting criterion. The tree node created for partition D is labeled with the splitting criterion, branches are grown for each outcome of the criterion, and the tuples are partitioned accordingly.

Three popular attribute selection measures are

ü information gain,

ü gain ratio, and

ü gini index.

Information gain

ID3 uses information gain as its attribute selection measure. This measure is based on which studied the value or “information content” of messages.

Let node N represent or hold the tuples of partition D. The attribute with the highest information gain is chosen as the splitting attribute for nodeN.

The expected information needed to classify a tuple in D is given by

Info(D) is also known as the entropy of D.

Now, suppose we were to partition the tuples in D on some attribute Ahaving v distinct values, {a1, a2, : : : , av}, as observed from the training data. If A is discrete-valued, these values correspond directly to the v outcomes of a test on A. Attribute A can be used to split D into v partitions or subsets, {D1,D2, : : : , Dv},where Dj contains those tuples in D that have outcome aj of A. These partitions would correspond to the branches grown from node N.

The term |Dj | / |D| acts as the weight of the jth partition. InfoA(D) is the expected information required to classify a tuple from D based on the partitioning by A. The smaller the expected information (still) required, the greater the purity of the partitions.

Information gain is defined as the difference between the original information requirement

(i.e., based on just the proportion of classes) and the newrequirement (i.e., obtained after partitioning on A). That is,

Example : Induction of a decision tree using information gain. The following table presents a training set,

D, of class-labeled tuples randomly selected from the AllElectronics customer database.

The class label attribute, buys computer, has two distinct values (namely, fyes, nog); therefore, there are two distinct classes (that is, m = 2). Let class C1 correspond to yes and class C2 correspond to no. There are nine tuples of class yes and five tuples of class no. A (root) node N is created for the tuples in D. To find the splitting criterion for these tuples, we must compute the information gain of each attribute.

Table : Class-labeled training tuples from the AllElectronics customer database.

By using following equation to compute the expected information needed to classify a tuple in D:

Where Total number of tuple is 14 , Class = “Yes” are 9 and Class = “no” are 5 , Therefore

Info(D) = - Positive tuple( Yes) – Negative tuples ( no).

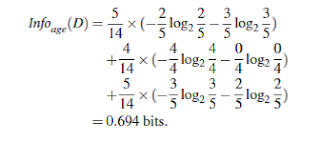

Next, we need to compute the expected information requirement for each attribute. Let’s start with the attribute age.We need to look at the distribution of yes and no tuples for each category of age. For the agecategory youth, there are two yes tuples and three no tuples. For the categorymiddle aged, there are four yes tuples and zero no tuples. For the categorysenior, there are three yes tuples and two no tuples. Using InfoA(D) equation, the expected information needed to classify a tuple in D if the tuples are partitioned according to age is

That is

InfoA(D) = Age tuple (Youth) * ( - Youth with “Yes” - Youth with “no” ) +

Age tuple (middle_aged) * ( - Middle_aged with “Yes” – Middle_aged with “no” ) +

Age tuple (senior) * ( - Senior with “Yes” - Senior with “no” )

Hence, the gain in information from such a partitioning would be,

Similarly, we can compute Gain(income) = 0.029 bits, Gain(student) = 0.151 bits, and Gain(credit rating) = 0.048 bits. Because age has the highest information gain among the attributes, it is selected as the splitting attribute. Node N is labeled with age, and branches are grown for each of the attribute’s values.

The attribute age has the highest information gain and therefore becomes the splitting attribute at the root node of the decision tree. Branches are grown for each outcome of age. The tuples are shown partitioned accordingly.

Suppose, instead, that we have an attribute A that is continuous-valued, rather than discrete-valued.

For such a scenario, we must determine the “best” split-point for A, where the split-point is a threshold on A. We first sort the values of A in increasing order. Typically, the midpoint between each

pair of adjacent values is considered as a possible split-point.

The point with the minimum expected information requirement for Ais selected as the split point for A. D1 is the set of tuples in D satisfying A <=split point, and D2 is the set of tuples in D satisfying A > =split point.

Gain ratio :

The information gain measure is biased toward tests with many outcomes. That is, it prefers to select attributes having a large number of values.

Therefore, the information gained by partitioning on this attribute is maximal. Clearly, such a partitioning is useless for classification.

C4.5, a successor of ID3, uses an extension to information gain known as gain ratio, which attempts to overcome this bias. It applies a kind of normalization to information gain using a “split information” value defined analogously with Info(D) as

This value represents the potential information generated by splitting the training data set, D, into v partitions, corresponding to the v outcomes of a test on attribute A.

It differs from information gain, which measures the information with respect to classification that is acquired based on the same partitioning. The gain ratio is defined as

The attribute with the maximum gain ratio is selected as the splitting attribute.

Example, Computation of gain ratio for the attribute income. A test onincome splits the data of Table 6.1 into three partitions, namely low, medium, and high, containing four, six, and four tuples, respectively. To compute the gain ratio of income, we first use Equation

we have Gain(income) = 0.029. Therefore, GainRatio(income) = 0.029/0.926 = 0.031.

Gini index :

The Gini index is used in CART. Using the notation described above, the Gini index

measures the impurity of D, a data partition or set of training tuples, as

where pi is the probability that a tuple in D belongs to class Ci and is estimated by

The Gini index considers a binary split for each attribute. Let’s first consider the case where A is a discrete-valued attribute having v distinct values, {a1, a2, : : : , av}, occurring in D. To determine the best binary split onA, we examine all of the possible subsets that can be formed using known values of A.

If A has v possible values, then there are 2v possible subsets. For example, if income has three possible values, namely { low, medium, high}, then the possible subsets are {low, medium, high},{low, medium},{low, high},{medium, high},{low}, {medium},{high}, and {}. We exclude the power set, {low, medium, high}, and the empty set from consideration since, conceptually, they do not represent a split. Therefore, there are 2v-2 possible ways to form two partitions of the data, D, based on a binary split on A.

For each attribute, each of the possible binary splits is considered. For a discrete-valued attribute, the subset that gives the minimum gini index for that attribute is selected as its splitting subset.

For continuous-valued attributes, each possible split-point must be considered. The strategy is similar to that described above for information gain, where the midpoint between each pair of (sorted) adjacent values is taken as a possible split-point.

The point giving the minimum Gini index for a given (continuous-valued) attribute is taken as the split-point of that attribute. Recall that for a possible split-point of A, D1 is the set of tuples in D satisfying A <= split point, and D2 is the set of tuples in D satisfying A > split point.

The reduction in impurity that would be incurred by a binary split on a discrete- or continuous-valued attribute A is

Similarly, the Gini index values for splits on the remaining subsets are: 0.315 (for the subsets {low, high} and {medium}) and 0.300 (for the subsets {medium, high} and {low}). Therefore, the best binary split for attribute income is on {medium, high} (or {low}) because it minimizes the gini index.

Many other attribute selection measures have been proposed. CHAID, a decision tree algorithm that is popular in marketing, uses an attribute selection measure that is based on the statistical c2 test for independence. Other measures include C-SEP (which performs better than information gain and Gini index in certain cases) and G-statistic (an information theoretic measure that is a close approximation to c2 distribution).

Attribute selection measures based on the Minimum Description Length (MDL) principle have the least bias toward multivalued attributes. MDL-based measures use encoding techniques to define the “best” decision tree as the one that requires the fewest number of bits to both (1) encode the tree and (2) encode the exceptions to the tree (i.e., cases that are not correctly classified by the tree). Its main idea is that the simplest of solutions is preferred.

Other attribute selection measures consider multivariate splits (i.e., where the partitioning of tuples is based on a combination of attributes, rather than on a single attribute). The CART system, for example, can find multivariate splits based on a linear combination of attributes. Multivariate splits are a form of attribute (or feature) construction, where new attributes are created based on the existing ones.

Tree Pruning :

When a decision tree is built, many of the branches will reflect anomalies in the training data due to noise or outliers. Tree pruning methods address this problem of overfitting the data.

Pruned trees tend to be smaller and less complex and, thus, easier to comprehend. They are usually faster and better at correctly classifying independent test data (i.e., of previously unseen tuples) than unpruned trees.

“How does tree pruning work?” There are two common approaches to tree pruning:

ü prepruning and

ü postpruning.

In the prepruning approach, a tree is “pruned” by halting its construction early (e.g., by deciding not to further split or partition the subset of training tuples at a given node).

When constructing a tree, measures such as statistical significance, information gain, Gini index, and so on can be used to assess the goodness of a split.

The second and more common approach is postpruning, which removes subtrees from a “fully grown” tree. A subtree at a given node is pruned by removing its branches and replacing it with a leaf. The leaf is labeled with the most frequent class among the subtree being replaced.

C4.5 uses a method called pessimistic pruning, which is similar to the cost complexity method in that it also uses error rate estimates to make decisions regarding subtree pruning. Pessimistic pruning, however, does not require the use of a prune set. Instead, it uses the training set to estimate error rates.

Scalability and Decision Tree Induction :

More recent decision tree algorithms that address the scalability issue have been proposed. Algorithms for the induction of decision trees from very large training sets include SLIQ and SPRINT, both of which can handle categorical and continuous valued attributes.

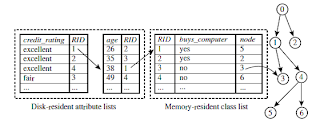

SLIQ employs disk-resident attribute lists and a single memory-resident class list. The attribute lists and class list generated by SLIQ for the tuple data of Table

Table for tuple data for the class buys computer.

Figure Attribute list and class list data structures used in SLIQ for the tuple data of above table

Table attribute list data structure used in SPRINT for the tuple data of above table.

The use of data structures to hold aggregate information regarding the training data are one approach to improving the scalability of decision tree induction.

While both SLIQ and SPRINT handle disk-resident data sets that are too large to fit into memory, the scalability of SLIQis limited by the use of its memory-resident data structure.

The method maintains an AVC-set (where AVC stands for “Attribute-Value, Classlabel”) for each attribute, at each tree node, describing the training tuples at the node.

BOAT (Bootstrapped Optimistic Algorithm for Tree Construction) is a decision tree algorithm that takes a completely different approach to scalability—it is not based on the use of any special data structures. Instead, it uses a statistical technique known as “bootstrapping ” to create several smaller samples (or subsets) of the given training data, each of which fits in memory.

No comments:

Post a Comment